As concerns grow over the harmful effects of social media on children, several European countries are stepping up pressure on the EU to implement stricter controls. Backed by France and Spain, Greece has led calls for a bloc-wide strategy to limit minors’ access to digital platforms, citing mounting evidence linking social media use to anxiety, depression, and poor self-esteem in young people.

In a meeting held Friday in Luxembourg, digital ministers from across the European Union debated the idea of setting a “digital age of adulthood,” a uniform threshold below which parental consent would be required for social media use.

France, Greece, and Denmark advocate for a minimum age of 15, while Spain proposes raising it to 16 — a model already adopted by Australia and currently under consideration in Norway and New Zealand. Despite these efforts, no EU-wide ban has yet been agreed upon, largely due to cultural differences and practical concerns around enforcement.

Still, momentum is building. Danish Digital Minister Caroline Stage Olsen stated, “It’s going to be something we’re pushing for,” underscoring the determination among some EU members to drive regulatory reform.

EU Prepares Age-Verification App

Next month, the European Commission is set to unveil an age-verification tool designed to protect minors online. The move is seen as a crucial step in enforcing existing minimum-age rules, which are often ignored or easily bypassed by children who enter false birthdates.

Henna Virkkunen, the EU’s top digital policy official, acknowledged the challenges of implementing uniform age restrictions across 27 nations but maintained that action is necessary. France’s Digital Minister Clara Chappaz described mandatory age checks as “a very big step” toward real accountability, especially considering how children as young as seven currently navigate social media with minimal barriers.

The EU recently issued draft non-binding guidelines for platforms, recommending private account settings by default and tools to mute or block harmful users. These guidelines will be finalized after a public consultation concludes this month.

France Leads the Way — But Hits Regulatory Snags

France has already enacted national legislation requiring parental consent for social media users under 15. However, the law remains in limbo pending approval from the EU.

In parallel, the French government has moved aggressively to block minors’ access to adult content, requiring age verification on pornographic sites. In protest, three major platforms went offline this week. Meanwhile, TikTok responded to pressure by banning the #SkinnyTok hashtag, which had been used to promote extreme thinness.

The Case Against ‘Shadow Algorithms’

Countries backing stricter controls — including Cyprus and Slovenia — argue that platform algorithms expose children to addictive and damaging content. Their joint proposal urges the EU to develop a system-wide parental control app with built-in age checks, ideally embedded directly into smartphones and tablets.

Such changes, proponents argue, are essential not only for curbing screen addiction but for safeguarding children’s mental health, critical thinking, and interpersonal development.

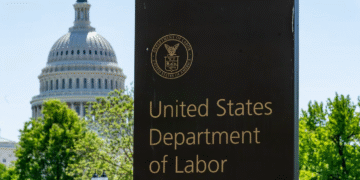

Tech Giants Under Investigation

The push for reform comes as the EU intensifies its enforcement of the Digital Services Act (DSA), a sweeping content moderation law aimed at holding tech companies accountable. Meta, which owns Facebook and Instagram, and TikTok are both under formal investigation, accused of failing to adequately protect minors from harmful content.

In a parallel probe launched last week, EU regulators also targeted four adult websites suspected of not preventing minors from accessing explicit material.

As European lawmakers double down on digital child safety, the pressure is mounting on Big Tech to change its algorithms — and its approach to protecting the youngest users online.
